
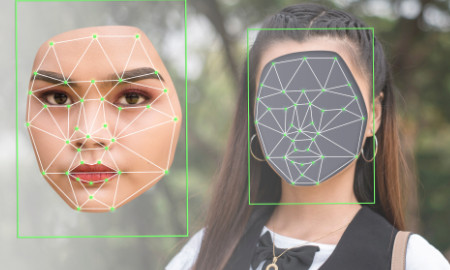

Innovations in artificial intelligence (AI) have made it easier than ever to replicate a person's name, image and likeness (NIL), particularly if that person is a celebrity. AI algorithms require massive amounts of 'training data' – videos, images and soundbites – to create 'deepfake' renderings of a persona that feel real. The vast amount of training data available for celebrities and public figures makes them easy targets. So, how can celebrities protect their NIL from unauthorised AI uses?

The right of publicity

The right of publicity is the primary tool for celebrity NIL protection. It protects against unauthorised commercial exploitation of an individual's persona, from appearance and voice to a signature catchphrase. Past right of publicity cases provide some context for how this doctrine could be applied to AI-generated works.

In the 1980s and 1990s respectively, Bette Midler and Tom Waits successfully sued over the use of sound-alike singers in commercial ads. As the court in Waits's case put it, these decisions recognised the 'right of publicity to control the use of [their] identity as embodied in [their] voice'. By the same rationale, deepfake ads and endorsements that use AI technology to replicate a celebrity's voice or appearance would similarly violate publicity rights.

Such lawsuits are already emerging. Earlier this year, a finalist on the television show Big Brother filed a class action lawsuit against the developer of Reface, a subscription-based mobile application that allows users to 'face-swap' with celebrities. Relying on traditional right of publicity principles, the plaintiff is seeking accountability for unauthorised commercial uses of his NIL in the AI technology space.

The right of publicity is not without limitations. First, because it is governed by state statutory and common law, protections vary by jurisdiction. California's right of publicity statute, for example, covers the use of a person's NIL in any manner, while laws in other states only protect against use of NIL in certain contexts. In 2020, New York expanded its right of publicity laws to specifically prohibit certain deepfake content.

Second, the right of publicity applies to commercial uses. The doctrine might stop AI users from profiting from celebrity images in an advertising and sales context, but creative uses – like deepfake memes, parody videos and perhaps even uses of AI-generated NIL in film and television – may fall outside its scope.

Lanham Act protections

The Lanham Act provides another avenue for addressing unauthorised AI-generated NIL. Section 43(a) of the Lanham Act is aimed at protecting consumers from false and misleading statements or misrepresentations of fact made in connection with goods and services. As with the right of publicity, courts have applied the Lanham Act in cases involving the unauthorised use of celebrity NIL to falsely suggest that the celebrity in question sponsors or endorses a product or service. For example, courts have applied the Lanham Act to circumstances that imply sponsorship, including the sound-alike cases referenced above and cases involving celebrity lookalikes, such as White v. Samsung Electronics and Wendt v. Host Int'l Inc.

Under this framework, celebrity plaintiffs may have recourse in the event their NIL is used, for example, in deepfake sponsored social media posts or in digitally altered ad campaigns featuring celebrity lookalikes. And, because the Lanham Act is a federal statute with nationwide applicability, it may offer greater predictability and flexibility to celebrity plaintiffs seeking redress.

Enforcement issues

AI technology also creates unique issues with enforcement and recovery. Because AI tools are so widely available, it can be difficult to identify the source of infringing content, and tech-savvy deepfake developers often take care to avoid detection. And while deepfake content is most easily shared on social media, social media providers are immunised from liability for certain non-IP tort claims (including right of publicity claims) arising out of user-generated content under Section 230 of the Communications Decency Act.

As AI technology advances, legislators and courts will continue to face new questions about the scope of persona rights, the applicability of existing legal protections and the practicality of recovery. While AI-specific regulation may be on the horizon, existing legal frameworks can be mobilised to combat misappropriation at the intersection of celebrity NIL and emerging technology.

Sharoni Finkelstein is counsel at Venable and the firm's West Coast brand protection lead. Alexandra Kolsky is an associate in the firm's Los Angeles office and advises clients on IP issues, including trademarks, copyrights and patents.
